Apache Kafka

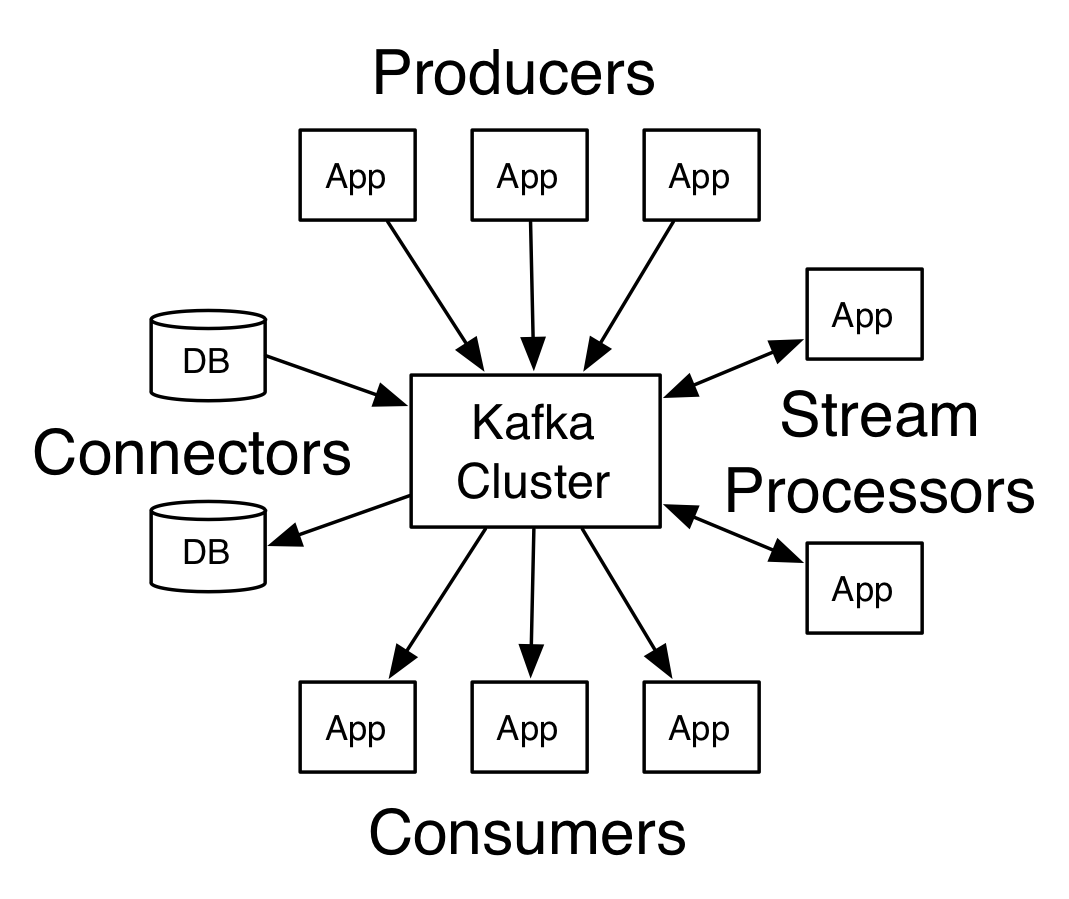

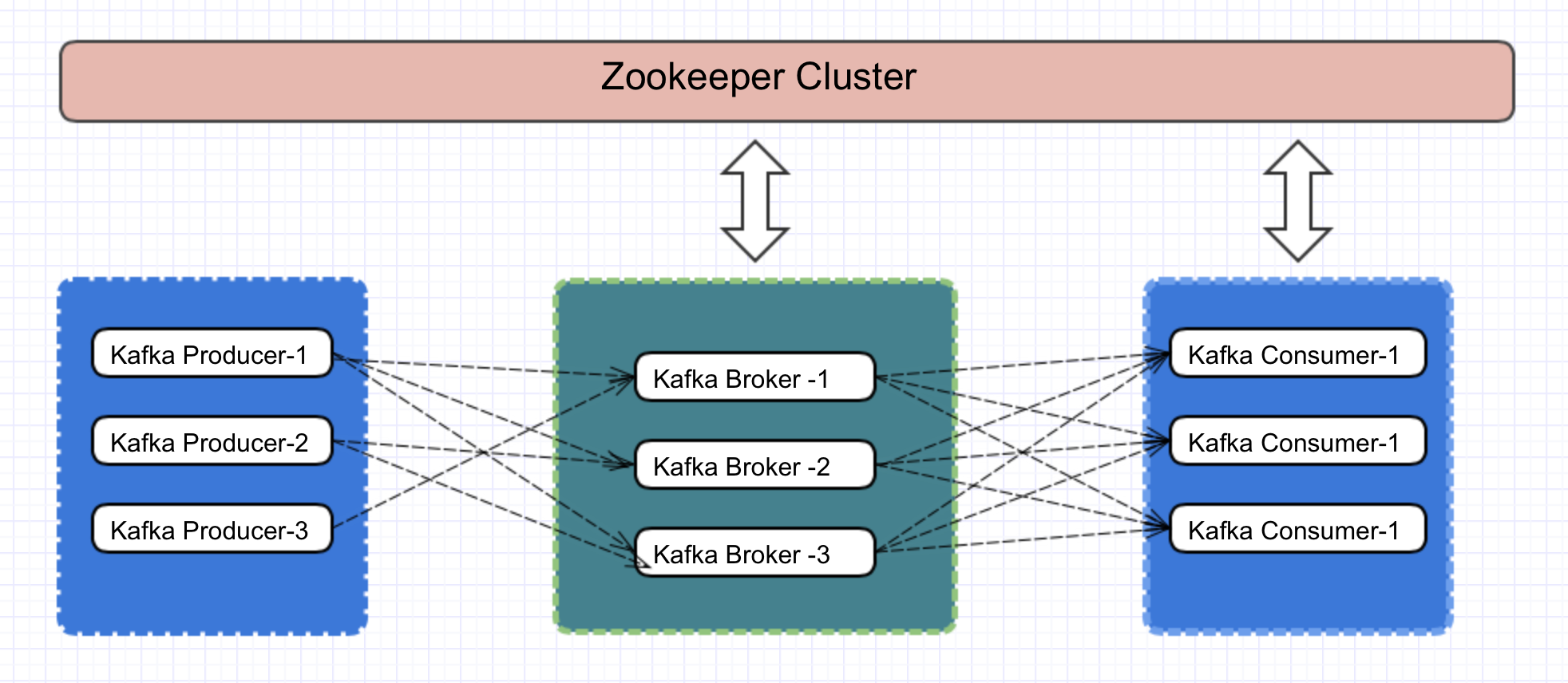

Apache Kafka is a distributed, fault-tolerant, publish-subscribe messaging system. It is used in real-time streaming data architectures to provide real-time analytics and to move data between systems or applications. It uses ZooKeeper to track the status of Kafka cluster nodes.

Kafka can run as a single-node broker or in cluster mode with multiple nodes.

Topics :

- A topic is a category or feed name to which messages are published and under which they are stored.

- All Kafka messages are organized into topics.

- We send a message (record) to a specific topic, and we read messages back by topic name.

- Producer applications write data to topics and consumer applications read from topics.

- Published messages stay in the Kafka cluster until a configurable retention period has passed.

Producers :

- An application that sends messages to the Kafka system.

- Each published message is sent to a specific topic.

Consumers :

- An application that reads (consumes) data from a specific topic in the Kafka system.

Broker :

- Every Kafka instance that is responsible for message exchange is called a broker.

- A broker can run on a stand-alone machine or as part of a cluster.

- Brokers store message data on disk but keep cluster metadata (such as which brokers are alive) in ZooKeeper.

- A single Kafka broker can handle hundreds of thousands of reads and writes per second, depending on hardware and message size.

- ZooKeeper is used to elect the controller broker, which in turn elects the leader for each partition.

ZooKeeper

ZooKeeper manages and coordinates the Kafka brokers. Its main job here is to notify producers and consumers when a new broker joins the Kafka system or an existing broker fails. We can use the ZooKeeper that ships with the Apache Kafka distribution.

Downloading Kafka (ZooKeeper is included) :

# Open a terminal and run the commands below

cd /Users/kiran/Desktop/medium/ # go to the path where you want to download

mkdir kafka

cd kafka

curl -L -o kafka_2.11-0.10.0.0.tgz https://archive.apache.org/dist/kafka/0.10.0.0/kafka_2.11-0.10.0.0.tgz

tar -xzf kafka_2.11-0.10.0.0.tgz

cd kafka_2.11-0.10.0.0
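The archive name above has to be typed the same way in three places. Copying it from formatted text can silently turn the hyphen into a dash. The following is a minimal sketch that derives the name from the two version numbers instead. It assumes bash and the archive.apache.org URL layout used in the curl command. `kafka_archive_name`, `kafka_archive_url`, and `fetch_kafka` are hypothetical helper names, not part of Kafka.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Kafka): build the archive name and URL
# from version numbers instead of typing them by hand.

# kafka_archive_name SCALA_VERSION KAFKA_VERSION -> e.g. kafka_2.11-0.10.0.0
kafka_archive_name() {
  printf 'kafka_%s-%s\n' "$1" "$2"
}

# kafka_archive_url SCALA_VERSION KAFKA_VERSION -> download URL
# (assumes the .../dist/kafka/<kafka version>/<archive>.tgz layout used above)
kafka_archive_url() {
  printf 'https://archive.apache.org/dist/kafka/%s/%s.tgz\n' "$2" "$(kafka_archive_name "$1" "$2")"
}

# fetch_kafka SCALA_VERSION KAFKA_VERSION: download and unpack into the current directory
fetch_kafka() {
  local name
  name="$(kafka_archive_name "$1" "$2")"
  curl -fL -o "${name}.tgz" "$(kafka_archive_url "$1" "$2")" || return 1
  tar -xzf "${name}.tgz"
}
```

For example, `fetch_kafka 2.11 0.10.0.0 && cd kafka_2.11-0.10.0.0` should reproduce the manual steps, provided the archive is still published at that URL.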

ZooKeeper Configuration :

We are going to create three ZooKeeper instances on the same node/system.

1. Create the ZooKeeper properties files

cd /Users/kiran/Desktop/medium/kafka/kafka_2.11-0.10.0.0/config # go to the config directory of the Kafka download

mv zookeeper.properties zookeeper1.properties

cp zookeeper1.properties zookeeper2.properties

cp zookeeper1.properties zookeeper3.properties

2. Create a data directory for each of the three ZooKeeper instances

mkdir -p /Users/kiran/Desktop/medium/kafka/data/zookeeper1

mkdir -p /Users/kiran/Desktop/medium/kafka/data/zookeeper2

mkdir -p /Users/kiran/Desktop/medium/kafka/data/zookeeper3

3. Create a unique id for each ZooKeeper instance (the number in myid must match that instance's server.N entry)

echo 1 > /Users/kiran/Desktop/medium/kafka/data/zookeeper1/myid

echo 2 > /Users/kiran/Desktop/medium/kafka/data/zookeeper2/myid

echo 3 > /Users/kiran/Desktop/medium/kafka/data/zookeeper3/myid

4. Edit the properties file of each of the three instances as shown below.

- Zookeeper-Instance-1 : zookeeper1.properties

vi zookeeper1.properties (add the configuration below)

dataDir=/Users/kiran/Desktop/medium/kafka/data/zookeeper1

clientPort=2181

# a non-production config

tickTime=2000

initLimit=5

syncLimit=2

server.1=localhost:2666:3666

server.2=localhost:2667:3667

server.3=localhost:2668:3668

maxClientCnxns=0

- Zookeeper-Instance-2 : zookeeper2.properties

vi zookeeper2.properties (add the configuration below)

dataDir=/Users/kiran/Desktop/medium/kafka/data/zookeeper2

clientPort=2182

# a non-production config

tickTime=2000

initLimit=5

syncLimit=2

server.1=localhost:2666:3666

server.2=localhost:2667:3667

server.3=localhost:2668:3668

maxClientCnxns=0

- Zookeeper-Instance-3 : zookeeper3.properties

vi zookeeper3.properties (add the configuration below)

dataDir=/Users/kiran/Desktop/medium/kafka/data/zookeeper3

clientPort=2183

# a non-production config

tickTime=2000

initLimit=5

syncLimit=2

server.1=localhost:2666:3666

server.2=localhost:2667:3667

server.3=localhost:2668:3668

maxClientCnxns=0

5. Running the zookeeper instances :

#open new terminal

cd /Users/kiran/Desktop/medium/kafka/kafka_2.11–0.10.0.0

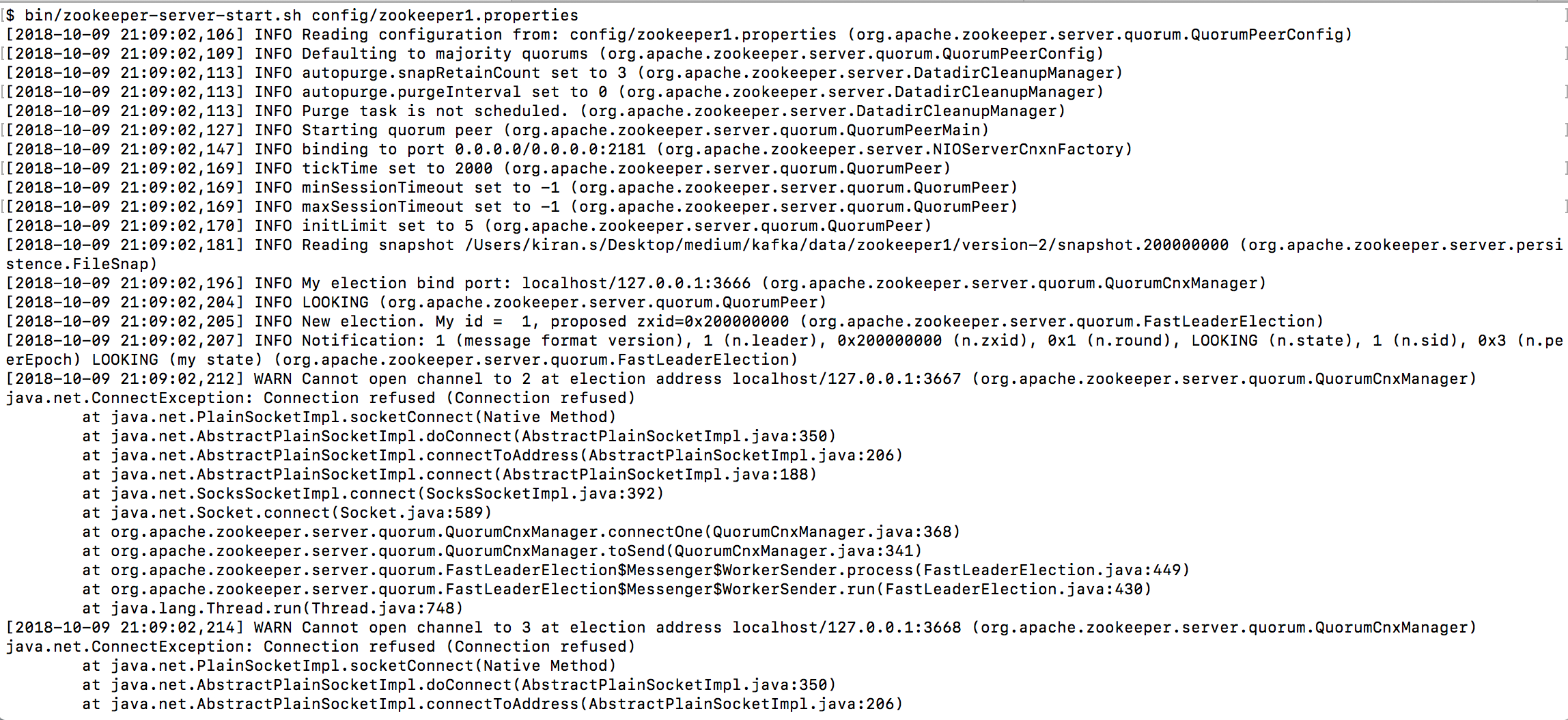

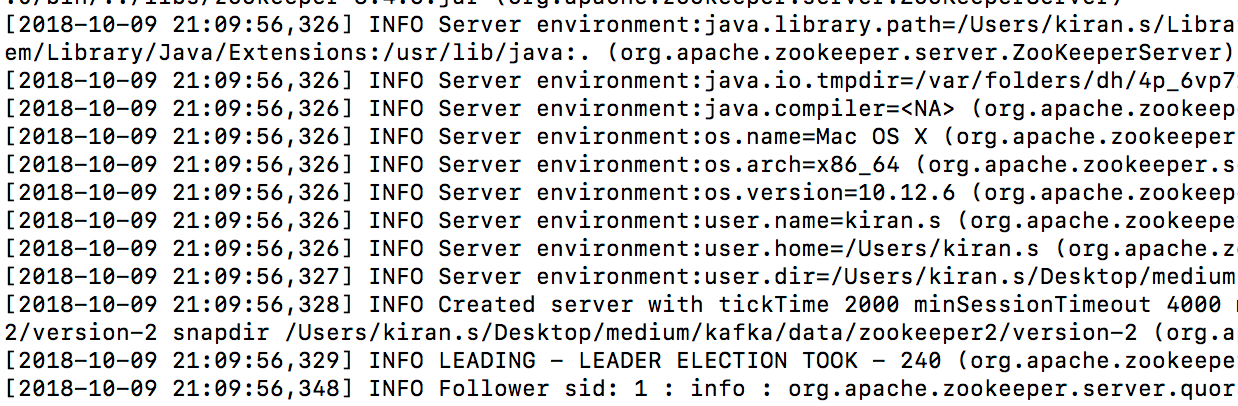

bin/zookeeper-server-start.sh config/zookeeper1.properties

#open new terminal

cd /Users/kiran/Desktop/medium/kafka/kafka_2.11–0.10.0.0

bin/zookeeper-server-start.sh config/zookeeper2.properties

#open new terminal

cd /Users/kiran/Desktop/medium/kafka/kafka_2.11–0.10.0.0

bin/zookeeper-server-start.sh config/zookeeper3.properties

6. Make sure all the Three zookeeper instances are running as shown in the below screenshots .
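Steps 1 to 4 repeat the same edits, and only the instance number changes. Below is a sketch of scripting them in bash. `generate_zk_configs` is a hypothetical helper. It writes fresh files with the values from step 4 rather than editing copies of zookeeper.properties.

```shell
#!/usr/bin/env bash
# Hypothetical helper: for N = 1..3, write CONFIG_DIR/zookeeperN.properties
# and DATA_ROOT/zookeeperN/myid using the ports from step 4.
generate_zk_configs() {
  local config_dir="$1" data_root="$2" n
  mkdir -p "$config_dir"
  for n in 1 2 3; do
    # Each instance gets its own data directory; myid must match server.N below.
    mkdir -p "$data_root/zookeeper$n"
    echo "$n" > "$data_root/zookeeper$n/myid"

    # Client ports 2181-2183; the peer/election ports are identical in all three files.
    cat > "$config_dir/zookeeper$n.properties" <<EOF
dataDir=$data_root/zookeeper$n
clientPort=$((2180 + n))
# a non-production config
tickTime=2000
initLimit=5
syncLimit=2
server.1=localhost:2666:3666
server.2=localhost:2667:3667
server.3=localhost:2668:3668
maxClientCnxns=0
EOF
  done
}
```

With the paths used in this article, the call would be `generate_zk_configs /Users/kiran/Desktop/medium/kafka/kafka_2.11-0.10.0.0/config /Users/kiran/Desktop/medium/kafka/data`.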

Kafka Broker Configuration :

We are going to create three Kafka broker instances on the same node/system.

1. Create the server properties files

cd /Users/kiran/Desktop/medium/kafka/kafka_2.11-0.10.0.0/config

mv server.properties server1.properties

cp server1.properties server2.properties

cp server1.properties server3.properties

2. Edit the broker properties file of each of the three instances

- Kafka Broker Instance 1 : server1.properties

vi server1.properties (update the configuration below)

broker.id=0

log.dirs=/tmp/kafka-logs-1

port=9093

advertised.host.name = localhost

zookeeper.connect=localhost:2181,localhost:2182,localhost:2183

- Kafka Broker Instance 2 : server2.properties

vi server2.properties (update the configuration below)

broker.id=1

log.dirs=/tmp/kafka-logs-2

port=9094

advertised.host.name = localhost

zookeeper.connect=localhost:2181,localhost:2182,localhost:2183

- Kafka Broker Instance 3 : server3.properties

vi server3.properties (update the configuration below)

broker.id=2

log.dirs=/tmp/kafka-logs-3

port=9095

advertised.host.name = localhost

zookeeper.connect=localhost:2181,localhost:2182,localhost:2183

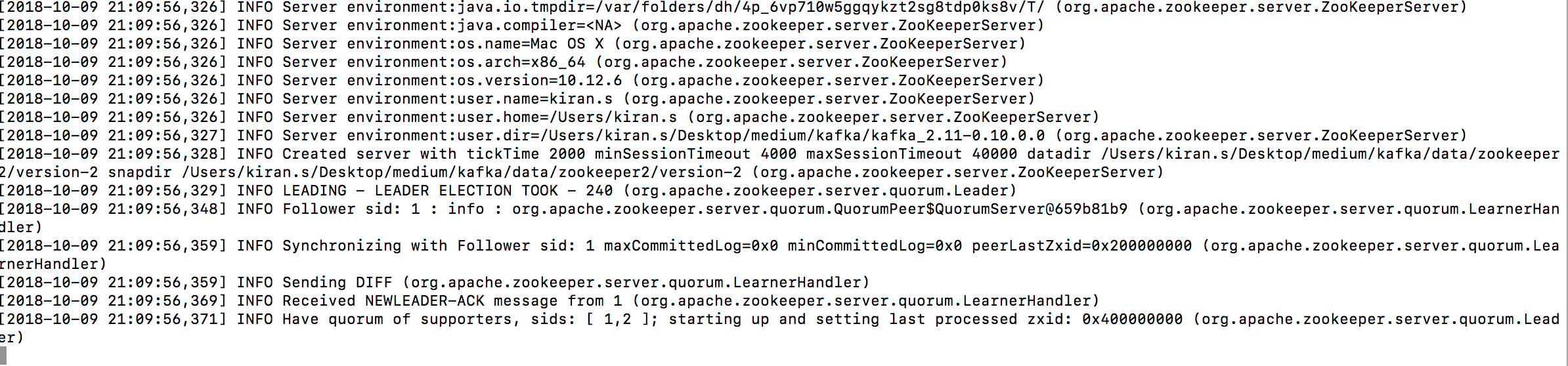

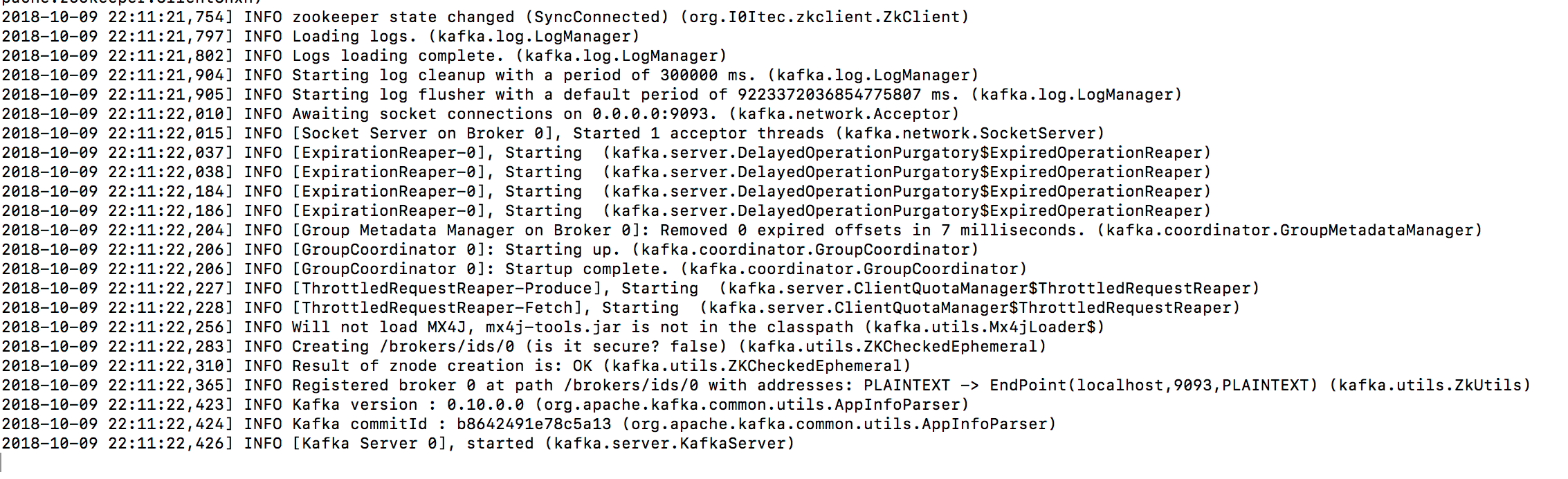

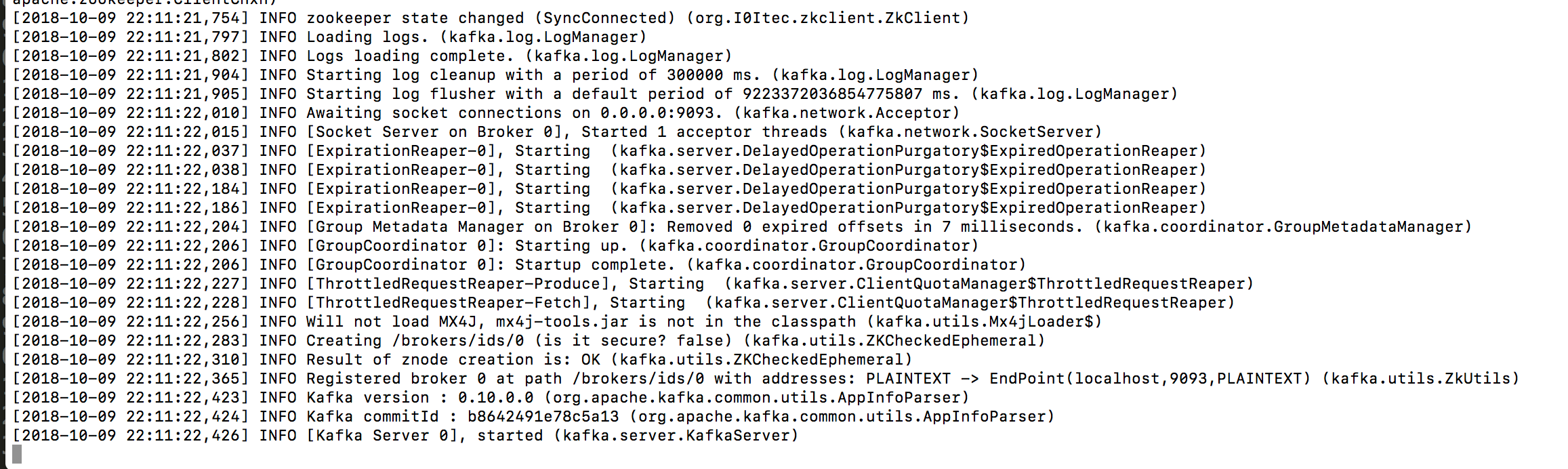

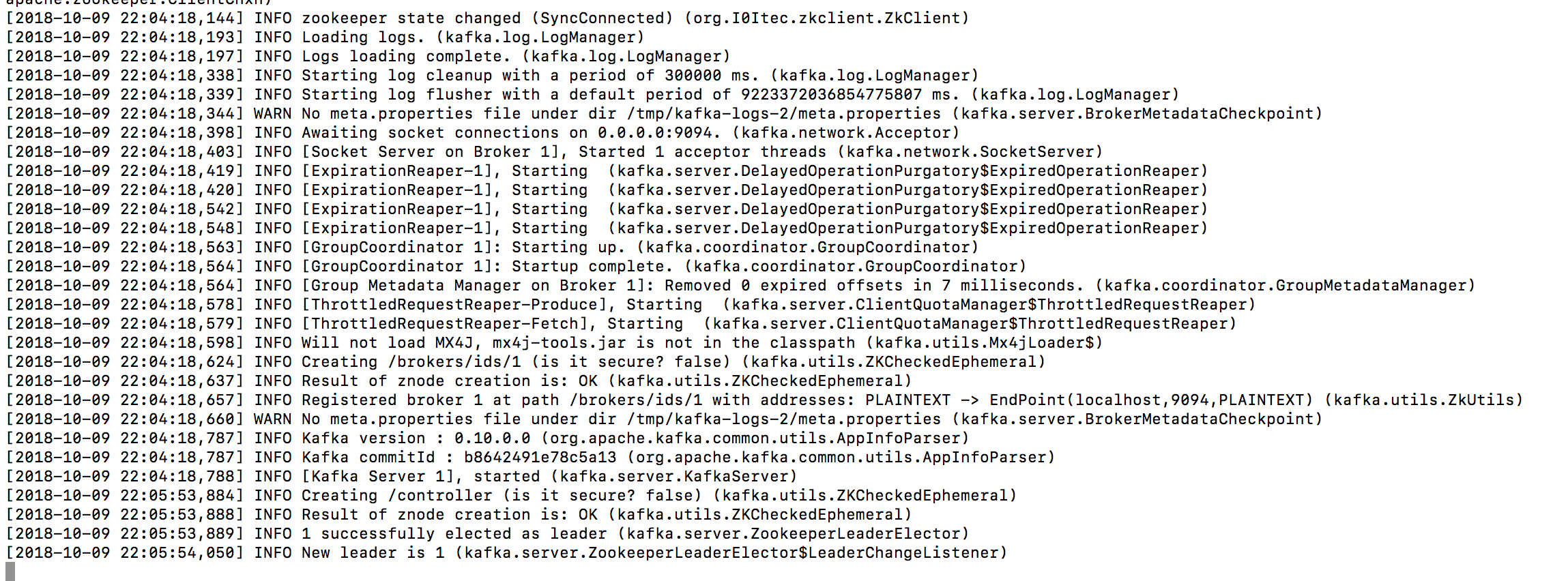

3. Start the Kafka broker instances :

# open a new terminal

cd /Users/kiran/Desktop/medium/kafka/kafka_2.11-0.10.0.0

bin/kafka-server-start.sh config/server1.properties

# open a new terminal

cd /Users/kiran/Desktop/medium/kafka/kafka_2.11-0.10.0.0

bin/kafka-server-start.sh config/server2.properties

# open a new terminal

cd /Users/kiran/Desktop/medium/kafka/kafka_2.11-0.10.0.0

bin/kafka-server-start.sh config/server3.properties

4. Make sure all three Kafka broker instances are running, as shown in the screenshots below.

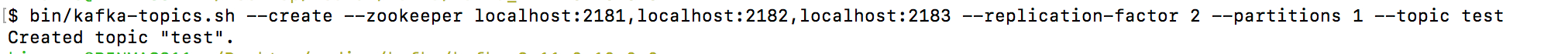

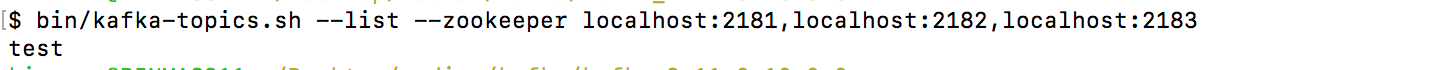

5. Create a new topic

Let’s create a topic named “test” with a single partition and two replicas.

# open a new terminal, cd into the Kafka directory, and run

bin/kafka-topics.sh --create --zookeeper localhost:2181,localhost:2182,localhost:2183 --replication-factor 2 --partitions 1 --topic test

6. List the topics registered in ZooKeeper

# open a new terminal, cd into the Kafka directory, and run

bin/kafka-topics.sh --list --zookeeper localhost:2181,localhost:2182,localhost:2183

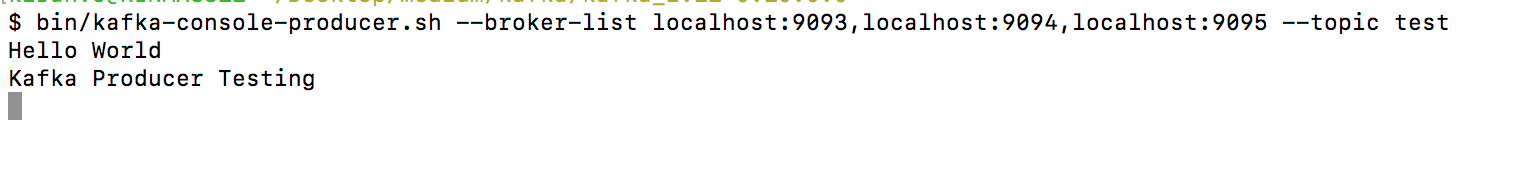

7. Running the Kafka Producer

The Producer can send messages to test topic by typing the messages in the console/terminal

#open new terminal

bin/kafka-console-producer.sh — broker-list localhost:9093,localhost:9094,localhost:9095 — topic test

Hello World #Enter the message you want to send to kafka topic

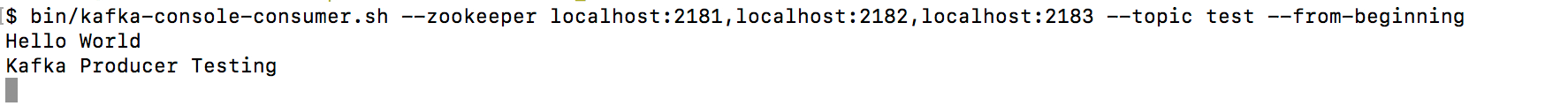

8. Running the Kafka Consumer.

The Consumer will display the data in the terminal/console as soon as publisher sents the data to the topic.

#open new terminal

bin/kafka-console-consumer.sh — zookeeper localhost:2181,localhost:2182,localhost:2183 — topic test — from-beginning
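The three server*.properties files from step 2 of the broker configuration differ only in the broker id, port, and log directory, so they can also be generated. Below is a sketch assuming bash. `generate_broker_configs` is a hypothetical helper. It writes only the keys listed in step 2, so every other broker setting falls back to Kafka's built-in default instead of the value in the stock server.properties.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write CONFIG_DIR/serverN.properties for N = 1..3 with the
# values from step 2 (broker.id N-1, port 9092+N, log dir /tmp/kafka-logs-N).
generate_broker_configs() {
  local config_dir="$1" n
  mkdir -p "$config_dir"
  for n in 1 2 3; do
    cat > "$config_dir/server$n.properties" <<EOF
broker.id=$((n - 1))
log.dirs=/tmp/kafka-logs-$n
port=$((9092 + n))
advertised.host.name=localhost
zookeeper.connect=localhost:2181,localhost:2182,localhost:2183
EOF
  done
}
```

For example, run `generate_broker_configs /Users/kiran/Desktop/medium/kafka/kafka_2.11-0.10.0.0/config`, then start the brokers as in step 3.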